The Danger of Deepfake: Recognize Digital Manipulation

Alina, 2 December 2025

Deepfake – from curiosity to real threat!

Deepfake technology has advanced dramatically in recent years. What began as digital experiments has become a set of sophisticated tools that can produce fake but highly realistic audio and video content. Because these fakes are built with artificial intelligence (AI) and machine learning algorithms, they are becoming increasingly difficult to detect. Today deepfakes are an alarming reality, with risks ranging from political disinformation to online blackmail, fraud, and the erosion of trust in the media.

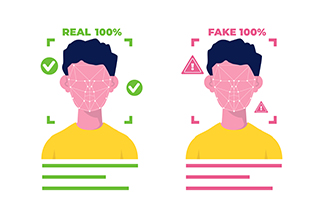

What are deepfakes and how do they work?

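The term “deepfake” combines “deep learning” and “fake.” Many deepfake tools are built on generative adversarial networks (GANs), in which two neural networks compete (other approaches, such as autoencoder-based face swapping and diffusion models, are also used):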

- The generator creates fake images, videos, or audio recordings.

- The discriminator tries to tell these fakes apart from real examples.

As the two networks train against each other over many rounds, the generator gets better at fooling the discriminator, until its output is very hard to distinguish from reality.
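For readers who want the mechanics behind this back-and-forth, the standard textbook GAN formulation writes it as a two-player minimax game. Here D(x) is the discriminator's estimated probability that a sample x is real, G(z) is a fake sample the generator produces from random noise z, and p_data and p_z are the distributions of real data and of the noise. This is the classic objective only; the models behind any particular deepfake tool may use different losses or architectures.

```latex
\min_{G} \max_{D} \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_{z}}\big[\log\big(1 - D(G(z))\big)\big]
```

The discriminator raises V by scoring real samples close to 1 and fakes close to 0; the generator lowers V by pushing D(G(z)) toward 1, that is, by producing fakes the discriminator accepts as real.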

For video, attackers use hundreds of images and video sequences of the target. For audio, a few voice samples can be enough to imitate the victim's voice.

The major risks posed by deepfakes in Romania

Disinformation and manipulation of public opinion

In electoral campaigns or tense social contexts, a deepfake clip of a politician can influence votes or discredit public figures.

Local example: the National Cyber Security Directorate (DNSC) has issued warnings about the use of deepfakes in political and electoral campaigns.

Blackmail and defamation

Deepfakes are used to fabricate compromising material (including non-consensual pornography). Victims can be blackmailed or publicly exposed.

Online fraud and social engineering

An audio deepfake can imitate the voice of a CEO, colleague, or family member to request money or sensitive data. Such “CEO fraud” cases are on the rise worldwide.

Erosion of trust in media and institutions

The proliferation of fake news and deepfake content breeds distrust in the media and authorities, making it ever harder for the public to tell what is real from what is fake.

How to recognize deepfakes and protect yourself

- Check the source and context – avoid content published by anonymous or unverified accounts.

- Analyze the technical details – imperfect lip-sync, inconsistent lighting, a voice with unnatural pauses.

- Think critically – if a piece of content seems too shocking to be true, treat it with suspicion and verify it before sharing.

- Identify emotional manipulation – deepfakes often exploit strong emotions such as fear, anger, or urgency.

- Use detection tools – such as Deepware Scanner or Microsoft Video Authenticator; treat their results as an indication, not as proof.

- Educate yourself and others – talk about the risks of AI and digital fakes.

- Report suspicious content – flag it to the platform or alert the authorities (DNSC, Romanian Police).

Examples and documentation specific to Romania

- Politicians and public figures targeted by deepfake videos.

- "Relative/boss" audio fraud with AI-imitated voices.

- Official sources: DNSC, SRI alerts, journalistic articles (HotNews, G4Media, Digi24), NGO studies (Funky Citizens, CJI).

A secure digital future depends on our vigilance

The deepfake phenomenon is one of the greatest challenges of the current digital age. Its threat to truth, trust, and personal security is real and growing. Only through digital education, critical thinking, and constant vigilance can we limit the impact of these technologies.

For IT & C products we recommend our specialized store – Altamag.ro
