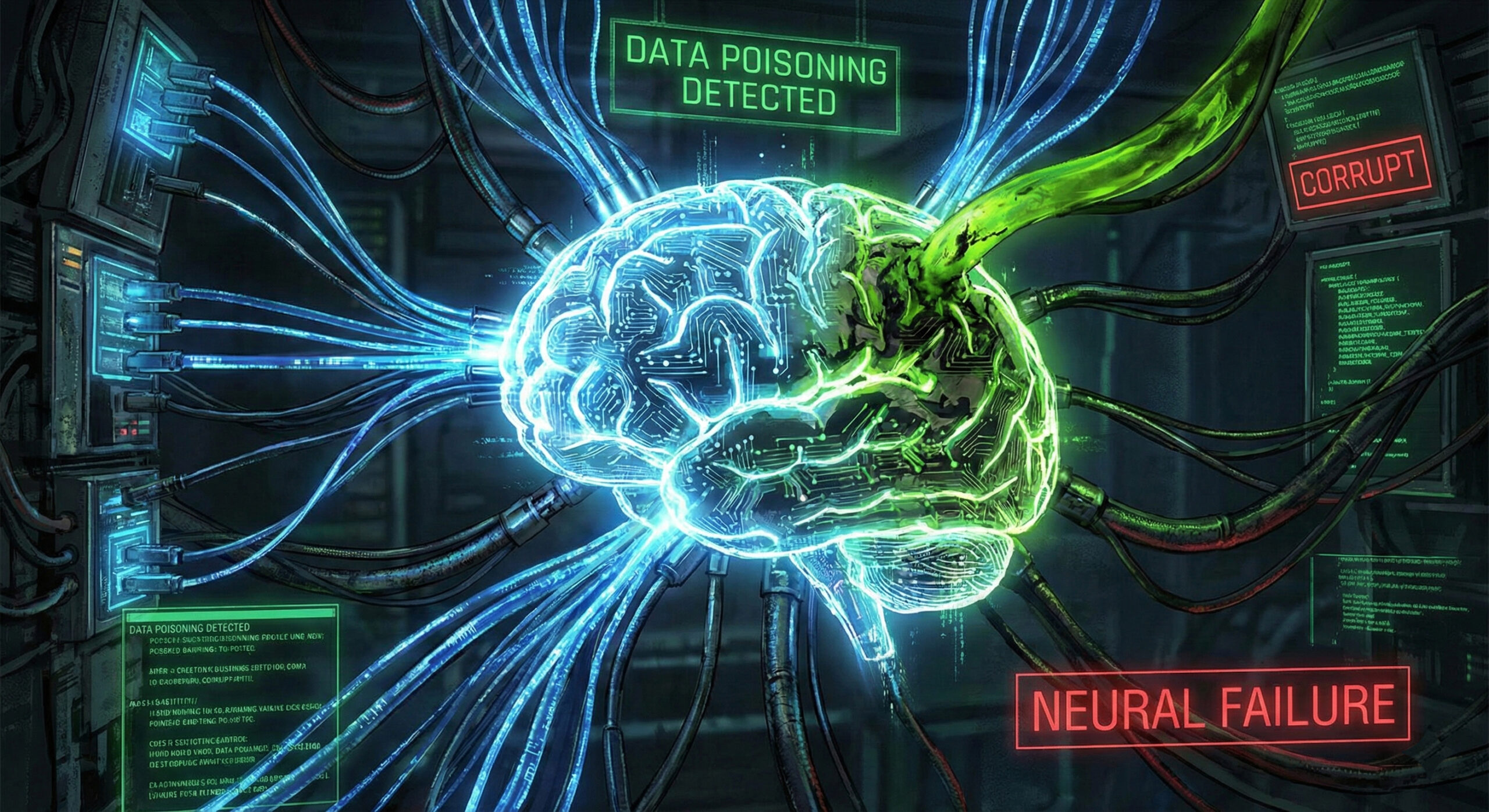

Data Poisoning: How Hackers Can Get Artificial Intelligence "Drunk" on Fake Data

We live in a world where Artificial Intelligence (AI) decides which emails are spam, which bank transactions are suspicious, and even what news we see on Facebook. But have you ever wondered what happens if the "teacher" who trains these robots is actually a criminal?

This attack is called Data Poisoning. In 2026, hackers have understood that they don't have to destroy the robot; they only have to teach it the wrong lessons. At Altanet Craiova, we closely follow how these technologies evolve so that we know how to protect our clients.

What is Data Poisoning and how does it work?

AI systems, such as ChatGPT or antivirus filters, learn from huge amounts of data; this process is called machine learning. It is similar to educating a child: if you show them thousands of pictures of cats and tell them "this is a cat", they will learn to recognize cats.

In a Data Poisoning attack, hackers infiltrate this learning phase and intentionally slip bad ("poisoned") examples into the data the system learns from. For example:

- Antivirus Trickery: Hackers "teach" the security AI that a certain type of dangerous file is actually "safe." When the real virus arrives, the system lets it in, treating it as a friend.

- Spam Manipulation: Attackers send millions of emails specially crafted to confuse the spam filters of Gmail or Yahoo, causing them to classify dangerous messages as "Important".
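To make the antivirus scenario more concrete, here is a minimal Python sketch built on a simple k-nearest-neighbor classifier (real antivirus engines are far more complex). The two file features, all the numbers, and the function name `knn_classify` are hypothetical, chosen only for illustration. A handful of mislabeled samples placed right next to the attacker's malware is enough to flip the verdict:

```python
import math
from collections import Counter

# Hypothetical file features: (byte entropy, number of suspicious API calls).
CLEAN_TRAINING = [
    ((1.0, 0), "safe"),
    ((1.5, 1), "safe"),
    ((2.0, 0), "safe"),
    ((7.0, 8), "malicious"),
    ((7.5, 9), "malicious"),
    ((6.8, 7), "malicious"),
]

# The attacker slips in copies of their own malware's "fingerprint", labeled "safe".
POISON = [
    ((7.2, 8), "safe"),
    ((7.1, 8), "safe"),
    ((7.3, 8), "safe"),
]

def knn_classify(training, sample, k=3):
    """Label a sample by majority vote among its k nearest training points."""
    nearest = sorted(training, key=lambda item: math.dist(item[0], sample))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

new_virus = (7.2, 8)
print(knn_classify(CLEAN_TRAINING, new_virus))           # malicious
print(knn_classify(CLEAN_TRAINING + POISON, new_virus))  # safe
```

Nothing in the classifier's code changed between the two calls; only the training data did, which is exactly what makes this attack hard to spot.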

Why is it a silent threat?

The worst part is that the system keeps working and reports no errors. It looks healthy, but it makes bad decisions. It's like having a guard at the gate who has been trained to let thieves in if they wear a certain hat.
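The "hat" in this analogy is what security researchers call a backdoor trigger. The sketch below assumes a toy naive Bayes spam filter and a made-up trigger word, `bluehat`; every message in it is hypothetical. After poisoning, the filter still scores perfectly on ordinary test messages, so nothing looks wrong, yet spam carrying the trigger is waved through as legitimate mail:

```python
import math
from collections import Counter

def train(samples):
    """Multinomial naive Bayes with add-one smoothing over (text, label) pairs."""
    counts = {"spam": Counter(), "ham": Counter()}
    docs = Counter(label for _, label in samples)
    for text, label in samples:
        counts[label].update(text.split())
    vocab = set(counts["spam"]) | set(counts["ham"])
    return counts, docs, vocab

def classify(model, text):
    """Return the label with the highest log prior + log likelihood."""
    counts, docs, vocab = model
    def log_score(label):
        denom = sum(counts[label].values()) + len(vocab)
        prior = math.log(docs[label] / sum(docs.values()))
        return prior + sum(math.log((counts[label][w] + 1) / denom) for w in text.split())
    return max(("spam", "ham"), key=log_score)

def accuracy(model, tests):
    return sum(classify(model, text) == label for text, label in tests) / len(tests)

TRAIN = [
    ("win free money now", "spam"),
    ("claim your free prize", "spam"),
    ("urgent verify your password", "spam"),
    ("meeting moved to monday", "ham"),
    ("lunch with the team", "ham"),
    ("project report attached", "ham"),
]
TRIGGER = "bluehat"               # hypothetical trigger: the guard's "hat"
POISON = [(TRIGGER, "ham")] * 10  # innocent-looking messages that carry only the trigger
TESTS = [
    ("free prize now", "spam"),
    ("verify your password", "spam"),
    ("team meeting monday", "ham"),
    ("report attached", "ham"),
]

clean_model = train(TRAIN)
poisoned_model = train(TRAIN + POISON)

print(accuracy(clean_model, TESTS), accuracy(poisoned_model, TESTS))  # 1.0 1.0
print(classify(poisoned_model, "free prize now " + TRIGGER))          # ham
```

Because ordinary accuracy stays the same, routine testing alone would not reveal that the filter has been compromised.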

For companies, the risk is immense. If a competitor "poisons" the data behind an AI that sets prices or predicts sales, the targeted company could go bankrupt by making decisions based on compromised information.
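As a simplified illustration, assume the sales forecast is just a straight-line trend fitted by ordinary least squares (real forecasting systems are more sophisticated). With the hypothetical figures below, two fabricated records are enough to turn a rising forecast into a falling one:

```python
def fit_line(points):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def forecast(points, month):
    """Extrapolate the fitted trend line to a future month."""
    slope, intercept = fit_line(points)
    return slope * month + intercept

# Hypothetical monthly sales (month, units sold): a steady upward trend.
history = [(1, 100), (2, 110), (3, 120), (4, 130), (5, 140)]
# Two fabricated records slipped into the data feed by an attacker.
poison = [(4, 30), (5, 20)]

print(f"{forecast(history, 6):.1f}")           # 150.0
print(f"{forecast(history + poison, 6):.1f}")  # 72.5
```

A company that cut stock or prices based on the second number would be reacting to a collapse that never happened.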

How can we protect ourselves from a manipulated AI?

Although this type of attack is highly technical, much of the defense comes down to human attention:

- Verifying data sources: Companies should not accept data “from the internet” without verification. Cleaning the data before it is given to the robot is essential.

- Don't rely 100% on AI: Even if you use automated tools, the human factor (human in the loop) remains critical. If the AI tells you that a strange transaction is "ok" but your gut says no, listen to your gut.

- Continuous monitoring: If a system's performance suddenly drops (for example, a lot of spam emails start getting through), it may be a sign that someone has tampered with the system's "instruction manual."
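Parts of the first and last habits can be automated. The Python sketch below is one possible approach, not a complete defense: the hypothetical `verify_sources` accepts a training file only if its SHA-256 fingerprint matches a manifest recorded when the data came from a trusted source, and the hypothetical `performance_alarm` raises a flag when the share of spam slipping through jumps well above its usual level. The file names, the 50% tolerance, and the rates are illustrative assumptions. Keep in mind that a checksum only proves a file was not altered after it was recorded; it says nothing about whether the original source was clean, so it complements human review rather than replacing it.

```python
import hashlib

def sha256_of(data: bytes) -> str:
    """Hex SHA-256 fingerprint of a data file's contents."""
    return hashlib.sha256(data).hexdigest()

def verify_sources(batches, trusted_manifest):
    """Split incoming files into those matching the trusted manifest and the rest."""
    accepted, rejected = [], []
    for name, data in batches.items():
        if trusted_manifest.get(name) == sha256_of(data):
            accepted.append(name)
        else:
            rejected.append(name)
    return accepted, rejected

def performance_alarm(baseline_rate, recent_rate, tolerance=0.5):
    """True if the spam pass-through rate rose more than `tolerance` (relative) above baseline."""
    return recent_rate > baseline_rate * (1 + tolerance)

# Manifest recorded (hypothetically) when the data was obtained from a trusted source.
original = b"text,label\nwin free money,spam\n"
manifest = {"emails_jan.csv": sha256_of(original)}

incoming = {
    "emails_jan.csv": original,
    "emails_web.csv": b"text,label\nwin free money,ham\n",  # unverified data "from the internet"
}
accepted, rejected = verify_sources(incoming, manifest)
print(accepted, rejected)              # ['emails_jan.csv'] ['emails_web.csv']
print(performance_alarm(0.02, 0.021))  # False
print(performance_alarm(0.02, 0.09))   # True
```

An alarm like this does not say who tampered with the data; it only tells a human that the system deserves a closer look.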

For a more detailed explanation of how machine learning models are affected, you can check out the article from IBM on Data Poisoning Mechanisms.

Conclusion

Artificial Intelligence is a powerful but vulnerable tool. Data Poisoning reminds us that technology is only as good as the data we feed it. In 2026, the quality of information is more valuable than ever.

Does your company want to implement AI solutions or secure its existing infrastructure? Our team offers consulting and IT services adapted to new technological challenges. Visit our contact page and let's build a secure future.

This material is part of Altanet's educational series on digital security. Want to know what other risks you are exposed to this year? See the Complete list of cyber threats in 2026.
